A quorum server in

However, if more catastrophic faults occur (for example, the loss of all possible network paths), the everRun system attempts to determine the overall state of the system and then takes the actions necessary to protect the integrity of the guest VMs.

The following examples illustrate the system's process during a catastrophic fault.

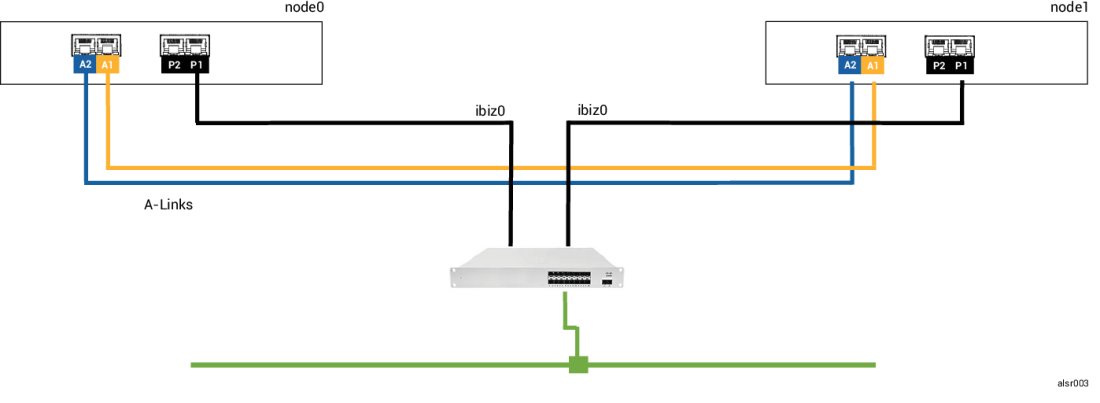

In this SplitSite example, the everRun system includes node0 and node1 but does not include a quorum server. Operation is normal; no faults are currently detected. The two nodes communicate their state and availability over the A-Link connections, as they do during normal (fault-free) operation. The following illustration shows the normal connections.

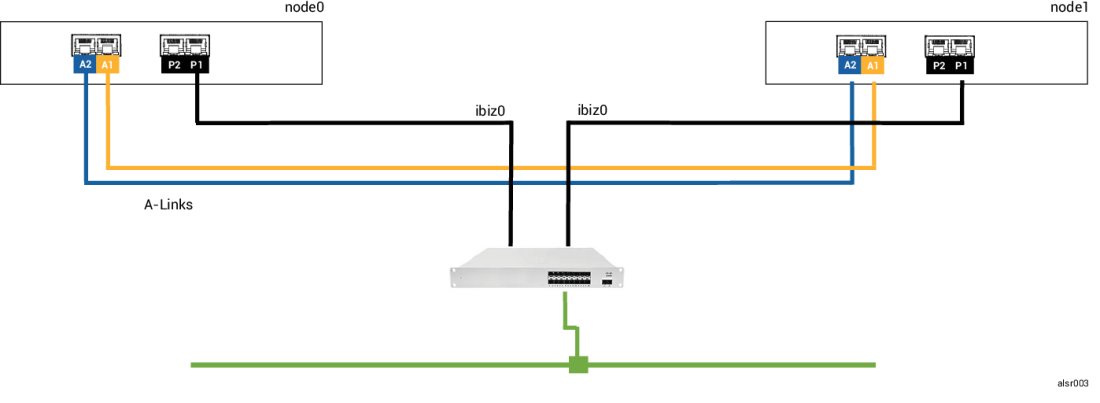

A careless fork-truck operator crashes through the wall, severing all of the network connections (both business and A-Links), while leaving the power available and the system running. The following illustration shows the fault condition.

The two nodes handle the fault, as follows:

From the perspective of an application client or an external observer, both guest VMs are active and generate network messages with the same return address. Both guest VMs generate data and experience different amounts of communication faults. The states of the guest VMs become more divergent over time.

After some time, network connectivity is restored: the wall is repaired and the network cables are replaced.

When each AX of the AX pair detects that its partner is back online, the AX pair uses the fault handler rules to choose which AX continues as active. The choice is unpredictable and does not consider which node performed more accurately during the split-brain condition.
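To make the failure mode concrete, the following is a minimal sketch, not everRun's implementation, that models one guest VM's AX pair as two hypothetical `Node` objects with no quorum server. During a total partition each node sees only a silent partner, which it cannot distinguish from a failed one, so both end up active. On reconnection one node is kept active by an arbitrary pick (`random.choice` stands in for the unpredictable choice the text describes). The names `Node`, `on_total_partition`, `is_split_brain`, and `on_reconnect` are illustrative assumptions.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    name: str
    role: str  # "active" or "standby" for this one guest VM


def on_total_partition(nodes: List[Node]) -> None:
    # No quorum server: a silent partner looks the same as a dead one,
    # so every node ends up active (a standby node promotes itself).
    for node in nodes:
        node.role = "active"


def is_split_brain(nodes: List[Node]) -> bool:
    # More than one active copy of the same guest VM.
    return sum(node.role == "active" for node in nodes) > 1


def on_reconnect(nodes: List[Node], rng: random.Random) -> Node:
    # Arbitrary pick: nothing here looks at which node's data is "better".
    winner = rng.choice(nodes)
    for node in nodes:
        node.role = "active" if node is winner else "standby"
    return winner


pair = [Node("node0", "active"), Node("node1", "standby")]
on_total_partition(pair)
print(is_split_brain(pair))  # True: both copies run and diverge
winner = on_reconnect(pair, random.Random())
print(winner.name, is_split_brain(pair))  # e.g. "node1 False"
```

The data produced by whichever node loses the pick is not merged; the model simply demotes it, which is why the divergence described above matters.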

After a split-brain condition, the system requires several minutes to resynchronize, depending on how much disk activity needs to be sent to the standby node. If several guest VMs are active on different nodes, synchronization traffic may flow in both directions.
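The following sketch illustrates why resynchronization can run in both directions: each guest VM is assumed to sync from its active node to the other node, so VMs that are active on different nodes produce opposite flows. The function `sync_directions` and its inputs are hypothetical; they do not correspond to any everRun interface.

```python
from collections import Counter
from typing import Dict, Tuple


def sync_directions(active_node_by_vm: Dict[str, str],
                    nodes: Tuple[str, str]) -> Counter:
    """Count resync flows, assuming each VM syncs active -> other node."""
    a, b = nodes
    flows: Counter = Counter()
    for vm, active in active_node_by_vm.items():
        if active not in nodes:
            raise ValueError(f"{vm}: unknown node {active!r}")
        standby = b if active == a else a
        flows[(active, standby)] += 1
    return flows


flows = sync_directions({"vm1": "node0", "vm2": "node1", "vm3": "node1"},
                        ("node0", "node1"))
print(dict(flows))  # {('node0', 'node1'): 1, ('node1', 'node0'): 2}
print(len(flows) == 2)  # True: traffic flows in both directions
```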

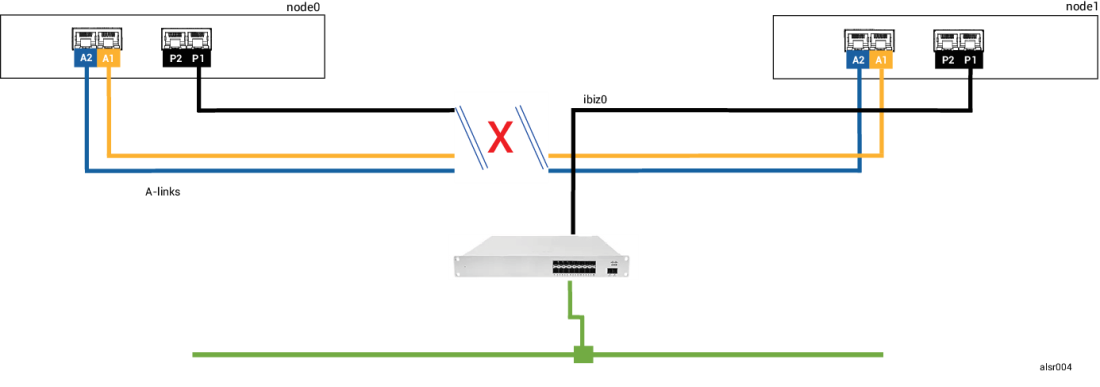

In this SplitSite example, the everRun system includes node0 and node1 with connections identical to those of the system in Example 1. In addition, the system in Example 2 includes a quorum server. The following illustration shows these connections.

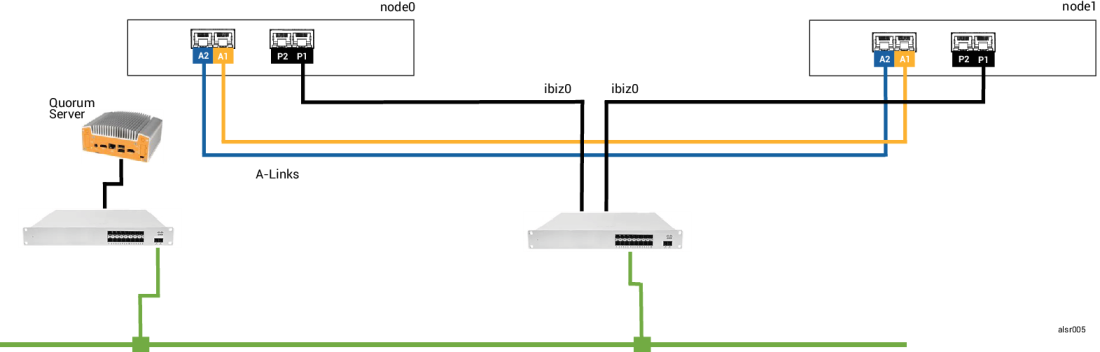

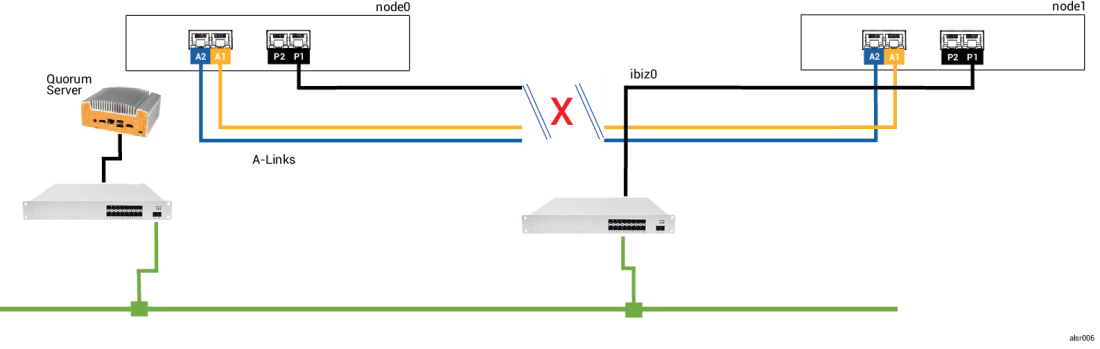

That careless fork-truck operator crashes through the wall again, severing all of the network connections while leaving the power available and the system running. The following illustration shows the fault condition.

The two nodes handle the fault, as follows:

From the perspective of an application client or an external observer, the guest VM on node1 remains active and generates data while the VM on node0 is shut down. No split-brain condition exists.
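One common way a witness prevents split-brain is to act as a single arbiter that hands the active role for a VM to only one node. The sketch below assumes exactly that: a hypothetical `QuorumServer` grants a VM to the first node that claims it and refuses later claimants. This is an illustrative assumption, not a description of everRun's quorum protocol. A node that loses its partner keeps the VM active only if it can reach the arbiter and wins the claim; otherwise it shuts the VM down. In Example 2, the outcome is the same whether node0 lost the claim or could not reach the quorum server at all.

```python
import threading
from typing import Dict


class QuorumServer:
    """Hypothetical arbiter: the first node to claim a VM owns it."""

    def __init__(self) -> None:
        self._lock = threading.Lock()  # claims from both nodes may race
        self._owner: Dict[str, str] = {}

    def claim(self, vm: str, node: str) -> bool:
        with self._lock:
            # setdefault records the first claimant and returns the owner.
            return self._owner.setdefault(vm, node) == node


def on_partner_lost(node: str, vm: str, quorum: QuorumServer,
                    quorum_reachable: bool) -> str:
    """What a node does with its guest VM once its partner goes silent."""
    if quorum_reachable and quorum.claim(vm, node):
        return "keep VM active"
    # Lost the claim, or cannot reach the arbiter at all.
    return "shut down VM"


# Example 2: node1 reaches the quorum server first.
quorum = QuorumServer()
print(on_partner_lost("node1", "vm1", quorum, quorum_reachable=True))   # keep VM active
print(on_partner_lost("node0", "vm1", quorum, quorum_reachable=True))   # shut down VM
```

In this simplified model a claim is never released; a practical arbiter would also need a way to release or expire ownership after the nodes reconnect.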

After some time, network connectivity is restored: the wall is repaired and the network cables are replaced.

When the node1 AX detects that its partner is back online, the node0 AX becomes Standby. Because the guest VM on node0 was shut down, data synchronization begins from node1 to node0.

The system requires a few minutes to resynchronize, depending on how much disk activity needs to be sent to the standby node.

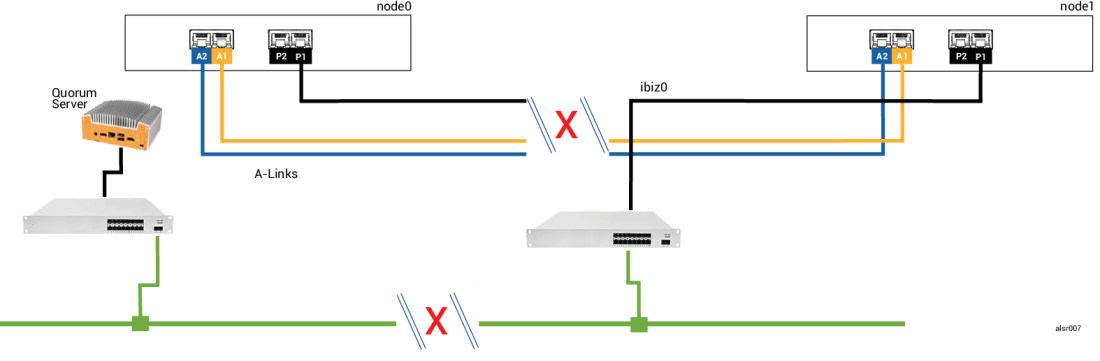

In

The fault handling is similar to Example 2 fault handling, with one important difference for node1:

In this case, the guest VM is shut down on both node0 and node1, preventing split-brain from occurring. The tradeoff is that the guest VM is unavailable until the connection to either node0 or to the quorum server is restored.
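The tradeoff in this paragraph can be shown by comparing two policies for a node that has lost both its partner and the quorum server. The sketch assumes, as our reading of this example, that both node0 and node1 end up in that isolated state. The "safety-first" policy corresponds to the behavior described here; "availability-first" is a hypothetical contrast that behaves like the no-quorum system in Example 1. The function names are illustrative only.

```python
def isolated_node_keeps_vm(policy: str) -> bool:
    """Does a node cut off from its partner AND the quorum server keep the VM?"""
    if policy == "safety-first":        # behavior described for this example
        return False
    if policy == "availability-first":  # hypothetical contrast, like Example 1
        return True
    raise ValueError(f"unknown policy: {policy}")


def classify(active_copies: int) -> str:
    if active_copies > 1:
        return "split-brain"
    return "available" if active_copies == 1 else "unavailable"


# Both node0 and node1 are cut off from their partner and the quorum server.
isolated_nodes = ["node0", "node1"]
for policy in ("availability-first", "safety-first"):
    active = sum(isolated_node_keeps_vm(policy) for _ in isolated_nodes)
    print(f"{policy}: {classify(active)}")
# availability-first: split-brain
# safety-first: unavailable
```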

If you must restore the guest VM before the connection is restored, determine which node you do not want to operate and power it down. Then forcibly boot the node that you want to operate, and then forcibly boot the VM. For information on shutting down a VM and then starting it, see Managing the Operation of a Virtual Machine.

In some situations, the quorum server might be unreachable even without a catastrophic physical failure. One example is when the quorum computer is rebooted for routine maintenance such as applying an OS patch. In these situations, the AX detects that the quorum service is not responding, so it suspends synchronization traffic until the connection to the quorum server is restored. The guest VM continues to run on the node that was active when the connection was lost. However, the guest VM does not move to the standby node, because additional faults might occur. After the quorum service is restored, the AX resumes synchronization and normal fault handling, as long as the connection to the quorum server is maintained.
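The behavior during a quorum-server outage can be summarized as a small state machine for one guest VM's AX pair. The sketch below is a hypothetical model, not everRun's API: the class `AxPair` and its methods are assumed names. Losing the quorum server leaves the VM on its current node, suspends synchronization, and blocks any move to the standby node; restoring the quorum server resumes both synchronization and normal fault handling.

```python
from dataclasses import dataclass


@dataclass
class AxPair:
    """Hypothetical state for one guest VM's AX pair; not everRun's API."""
    active_node: str
    quorum_reachable: bool = True
    sync_running: bool = True

    def on_quorum_lost(self) -> None:
        # The VM keeps running where it is, but syncing to the standby stops.
        self.quorum_reachable = False
        self.sync_running = False

    def on_quorum_restored(self) -> None:
        # Synchronization and normal fault handling resume.
        self.quorum_reachable = True
        self.sync_running = True

    def may_move_to_standby(self) -> bool:
        # Assumed rule: no move to the standby while quorum is unreachable.
        return self.quorum_reachable and self.sync_running


pair = AxPair(active_node="node0")
pair.on_quorum_lost()  # e.g. the quorum computer reboots for an OS patch
print(pair.active_node, pair.sync_running, pair.may_move_to_standby())  # node0 False False
pair.on_quorum_restored()
print(pair.sync_running, pair.may_move_to_standby())  # True True
```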

If you restart the system after a power loss or a system shutdown, the everRun system waits indefinitely for its partner to boot and respond before the system starts any guest VMs. However, if the AX that was previously active can contact the quorum server, the AX starts the guest VM immediately without waiting for the partner node to boot. If the AX that was previously standby boots first, it waits for its partner node.

If the system receives a response from either the partner node or the quorum server, normal operation resumes and the VM starts, subject to the same fault handler rules that apply in other cases.
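The boot-time rules in the two paragraphs above can be condensed into one decision function per node. This is a minimal sketch under our reading of the text: a previously active node starts the VM if its partner or the quorum server responds, while a previously standby node waits for its partner even if the quorum server answers. The names `BootAction` and `on_boot` are hypothetical, and `quorum_responds` is simply `False` when no quorum server is configured.

```python
from enum import Enum


class BootAction(Enum):
    START_VM = "start guest VM"
    WAIT = "wait for partner"


def on_boot(was_active: bool, partner_responds: bool,
            quorum_responds: bool) -> BootAction:
    """Boot-time decision for one node (hypothetical model)."""
    if partner_responds:
        # Normal operation; the fault handler rules then decide placement.
        return BootAction.START_VM
    if was_active and quorum_responds:
        # Previously active node with quorum: no need to wait for the partner.
        return BootAction.START_VM
    # Previously standby, or no quorum response: wait indefinitely.
    # Only a person's forced boot overrides this.
    return BootAction.WAIT


for args in [(True, False, True), (False, False, True), (True, False, False)]:
    print(args, on_boot(*args).value)
# (True, False, True) start guest VM
# (False, False, True) wait for partner
# (True, False, False) wait for partner
```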

If the system does not receive a response from the quorum server, or if the system does not have a quorum server, then a person must forcibly boot a guest VM, which overrides any decisions made by the AX or the fault handler. Ensure that the same guest VM is never forcibly booted on both node0 and node1 (for example, by two different people acting independently). Doing so causes a split-brain condition.